The response is not in a JSON format

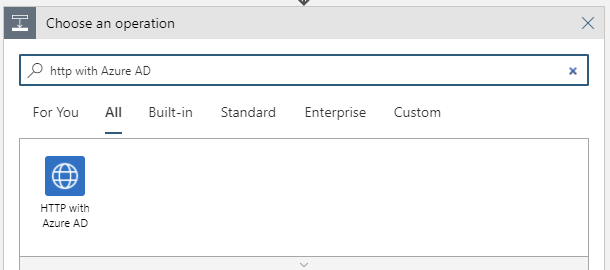

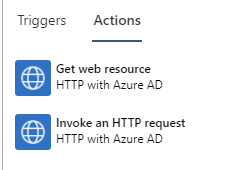

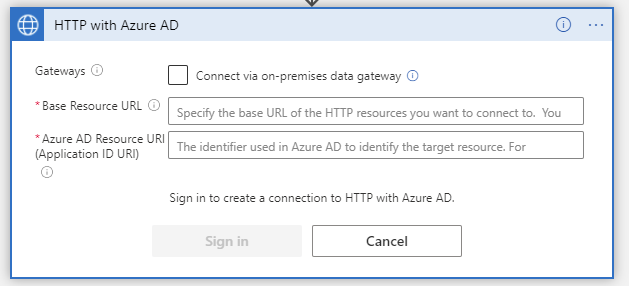

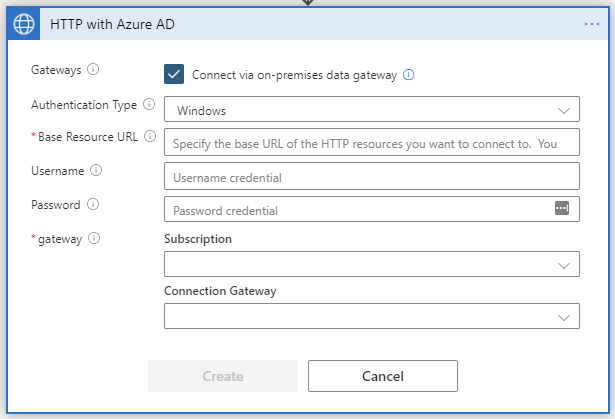

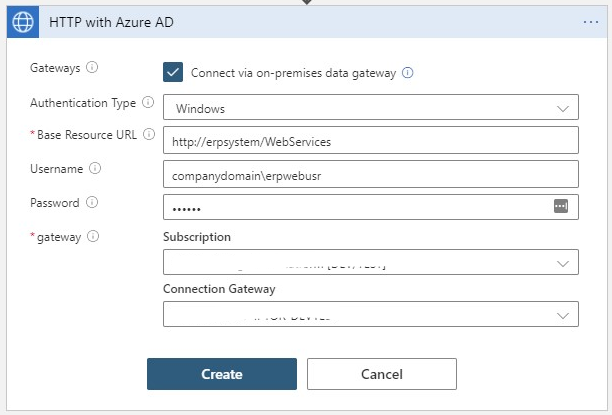

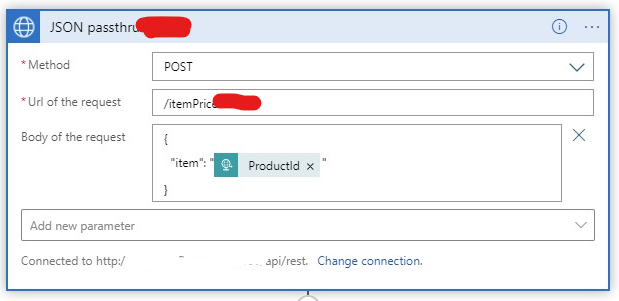

Have you been using the HTTP with Azure AD connector lately? It's really a game-changer for me. You no longer need a custom connector, unless you want one. I wrote a whole how-to post about it.

The problem

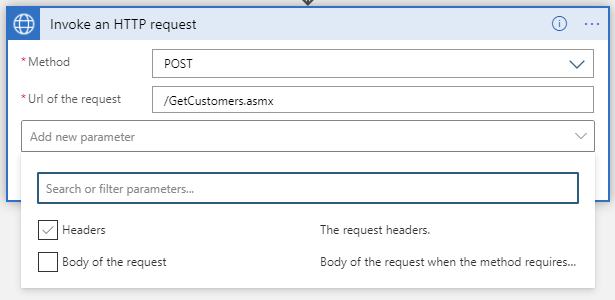

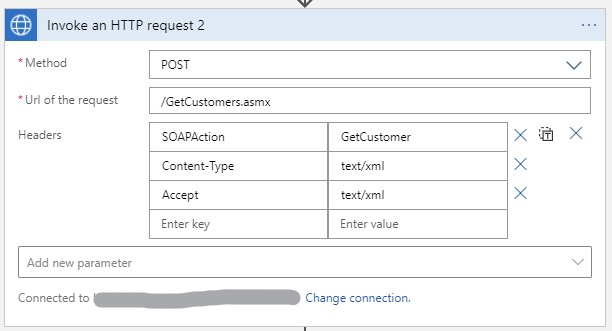

I was using the connector to access an on-prem web service "in the blind". I had some information about the message that should be sent, but I was not sure of the details. I was trying out different messages when I got this strange error back:

    {
      "code": 400,
      "source": <your logic app's home>,
      "clientRequest": <GUID>,
      "message": "The response is not in a JSON format",
      "innerError": "Bad request"
    }

Honestly, I misinterpreted this message and therein lies the problem.

I was furious! Why did the connector interpret the response as JSON? I knew it was XML; I even sent the `Accept: text/xml` header. Why did the connector suppress the error information I needed?

The search

After trying some variants of the request body, all of a sudden I got this error message:

    {
      "code": 500,
      "message": "{\r\n \"error\": {\r\n \"code\": 500,\r\n \"source\": \"<your logic app's home>\",\r\n \"clientRequestId\": \"<GUID>\",\r\n \"message\": \"The response is not in a JSON format.\",\r\n \"innerError\": \"<?xml version=\\\"1.0\\\" encoding=\\\"utf-8\\\"?><soap:Envelope xmlns:soap=\\\"http://schemas.xmlsoap.org/soap/envelope/\\\" xmlns:xsi=\\\"http://www.w3.org/2001/XMLSchema-instance\\\" xmlns:xsd=\\\"http://www.w3.org/2001/XMLSchema\\\"><soap:Body><soap:Fault><faultcode>soap:Server</faultcode><faultstring>Server was unable to process request. ---> Data at the root level is invalid. Line 1, position 1.</faultstring><detail /></soap:Fault></soap:Body></soap:Envelope>\"\r\n }\r\n}"
    }

And now I was even more furious! The connector was inconsistent! The worst kind of error!!!
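The 500 error above is hard to read because the useful part is wrapped twice: the outer `message` is a JSON document serialized as a string, and its `innerError` holds the SOAP fault as XML. Here is a minimal Python sketch of unpacking it, assuming the error has already been loaded into a dictionary shaped like the sample above. The function name `extract_fault_string` is made up for illustration and is not part of the connector.

```python
import json
import xml.etree.ElementTree as ET
from typing import Optional

# SOAP 1.1 envelope namespace, as seen in the error sample.
SOAP_NS = {"soap": "http://schemas.xmlsoap.org/soap/envelope/"}


def extract_fault_string(outer_error: dict) -> Optional[str]:
    """Return the SOAP faultstring buried in the wrapped error, if there is one.

    Hypothetical helper: it assumes the outer "message" field holds a JSON
    string with an "error" object, as in the 500 sample.
    """
    # First layer: the outer "message" is JSON serialized as a string.
    inner = json.loads(outer_error["message"])
    xml_text = inner["error"]["innerError"]
    try:
        # Second layer: "innerError" carries the raw SOAP envelope.
        root = ET.fromstring(xml_text)
    except ET.ParseError:
        # innerError was plain text (for example "Bad request"), not XML.
        return None
    fault = root.find("soap:Body/soap:Fault/faultstring", SOAP_NS)
    return fault.text if fault is not None else None
```

Run against the sample, this should surface the `faultstring` text directly, which is the piece of information that actually says what the service disliked about the request.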

The support

In my world, I had found a bug and needed to know what was happening. There was really only one solution: Microsoft support.

Together we found the solution, but I would still like to point out the error message that threw me off track.

The solution

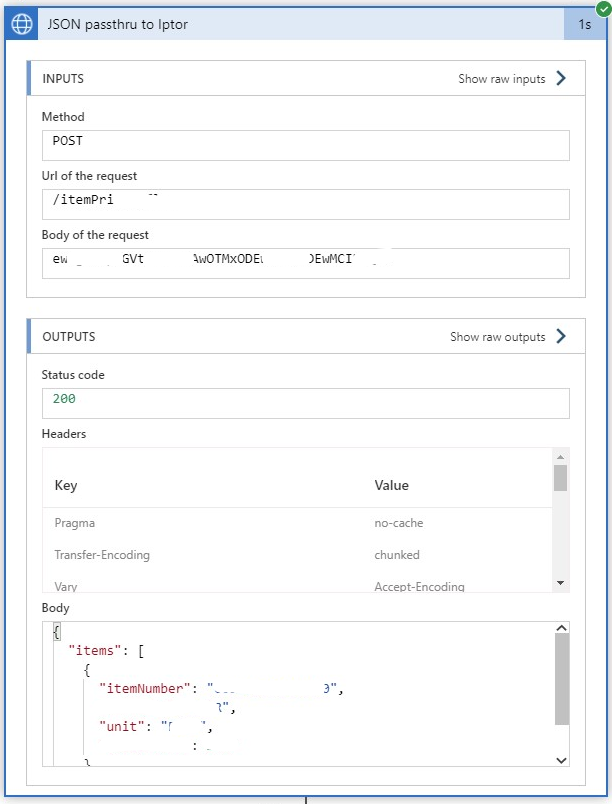

First off! The connector did not have a bug, nor is it inconsistent; it is just trying to parse an empty response as a JSON body.

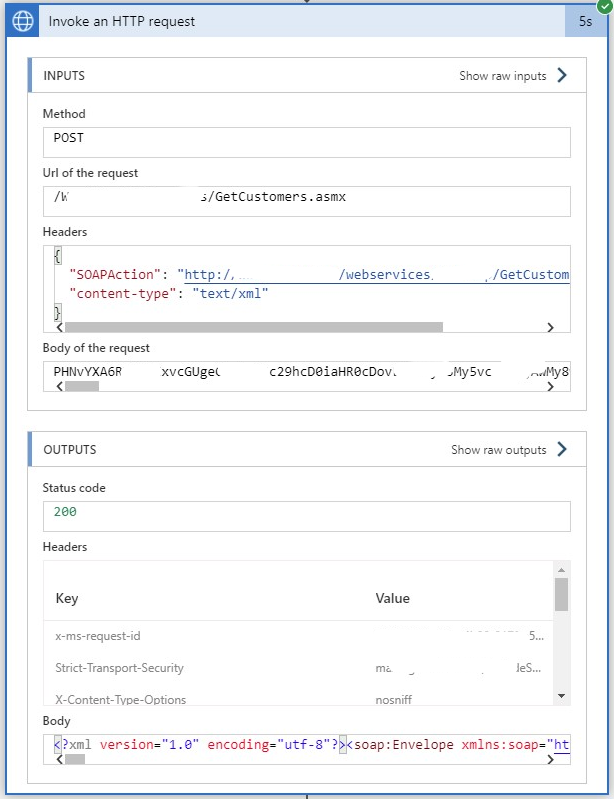

Take a look back at the error messages. They differ not only in message text but also in error code. The first one was a 400 and the other a 500. The connector always tries to parse the response message as JSON.

Error 500: In the second case (500) it found a response body and supplied the XML in `innerError`. Not the greatest solution, but it works.

Error 400: In the first error message the service responded with a Bad Request and an empty body. This pattern was normal back when we built Web Services. Nowadays, you expect a message back saying what is wrong. In this case, the connector just assumed that the body was JSON, failed to parse it, and reported it as such.

If we take a look at the message again, perhaps it should read:

    {
      "code": 400,
      "source": <your logic app's home>,
      "clientRequest": <GUID>,
      "message": "The response message was empty",
      "innerError": "Bad request"
    }

Or: "The body length was 0 bytes". Or: "The body contained no data".
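To make the difference concrete, here is a small Python sketch of the kind of check that would produce the clearer message: look at the body length first, and only then try to parse it as JSON. This illustrates the idea only; it is not how the connector is implemented, and the function name `describe_body` and its message texts are my own.

```python
import json


def describe_body(status: int, body: bytes) -> str:
    """Say why a response body could (or could not) be read as JSON.

    Hypothetical helper for illustration, not connector code.
    """
    # An empty body is reported as empty instead of as "not JSON".
    if len(body) == 0:
        return f"HTTP {status}: the response body was empty (0 bytes)"
    try:
        json.loads(body)
    except (json.JSONDecodeError, UnicodeDecodeError):
        # There is a body, but it is something else, for example a SOAP fault.
        return f"HTTP {status}: the response body is not JSON ({len(body)} bytes)"
    return f"HTTP {status}: the response body is valid JSON"
```

With a check like this, the 400 with no body and the 500 with a SOAP fault would get two different descriptions instead of sharing one.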

Wrapping up

Do not get caught staring at error messages. You can easily fall into the trap of assumptions. Verify your theory, try to gather more data, update your On-Premise Data Gateway to the latest version, and if you can: try the call from within the network, just like old times.
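If you have a machine inside the network, calling the service directly shows you the raw status code and body without any connector in between. Below is a rough sketch using only the Python standard library. The URL and envelope in the usage comment are placeholders, and your service may also require a `SOAPAction` header or authentication that I have left out.

```python
import urllib.error
import urllib.request
from typing import Tuple


def build_request(url: str, envelope: str) -> urllib.request.Request:
    """Build a POST request that sends the envelope as text/xml."""
    return urllib.request.Request(
        url,
        data=envelope.encode("utf-8"),
        headers={"Content-Type": "text/xml; charset=utf-8", "Accept": "text/xml"},
        method="POST",
    )


def call_service(url: str, envelope: str, timeout: float = 10.0) -> Tuple[int, bytes]:
    """Send the envelope and return (status, raw body), also for 4xx and 5xx."""
    try:
        with urllib.request.urlopen(build_request(url, envelope), timeout=timeout) as response:
            return response.status, response.read()
    except urllib.error.HTTPError as err:
        # urllib raises on error statuses, but the status and body are still readable.
        return err.code, err.read()


# Usage (placeholder URL and envelope, replace with your own):
# status, body = call_service("http://your-server/service.asmx", "<soap:Envelope>...</soap:Envelope>")
# print(status, len(body), body[:200])
```

Printing the body length next to the status code is the point here: a 400 with 0 bytes looks very different from a 500 with a SOAP fault.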