Recently I had the opportunity to use Logic Apps in a much more “locked down” Azure environment than I am used to, and I found some interesting things.

Logic Apps support for VNET

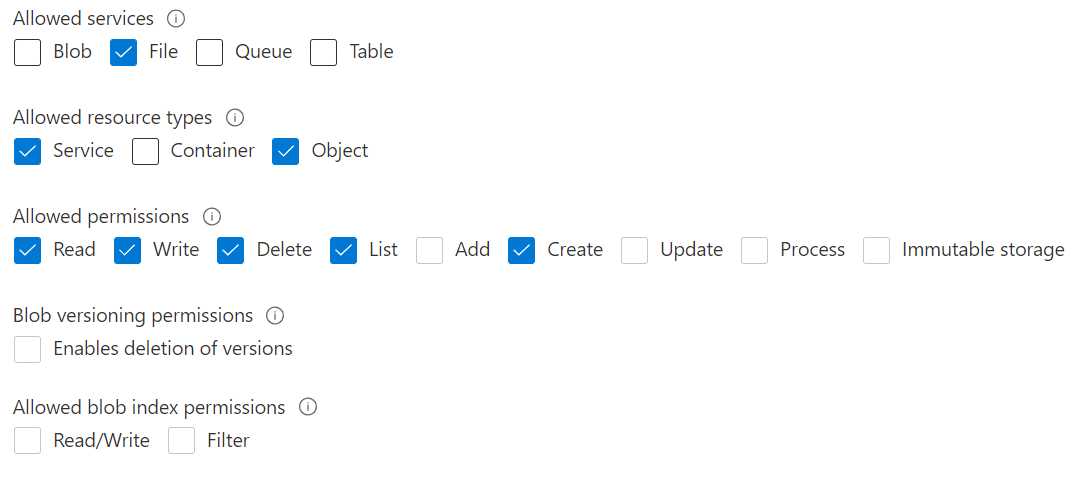

Famously, your Logic Apps share server space with other customers, as Logic Apps is a shared service. This makes it very easy to maintain and very cheap to run enterprise-grade stuff. But just as famously, Logic Apps cannot be assigned to a particular vnet. This does not hold true for the Logic Apps ISE, but that was off the table in this case.

This does not mean that it is insecure, and this client made it possible to use Logic Apps despite the locked down environment as long as we:

- Accessed all Logic Apps through another service connected to a vnet. In this case we used Azure API Management Premium.

- Whitelisted only the APIM’s IP address on each Logic App, unless

- The Logic App was called by another Logic App, in which case we restricted inbound calls to other Logic Apps instead.

Limits of Logic Apps

First off, you should always have the Logic Apps Limits and Config page in your favorites, not because you will often hit the limits, but because you should be aware that they exist. One section is particularly interesting in this case, the one on firewall configuration and IP addresses.

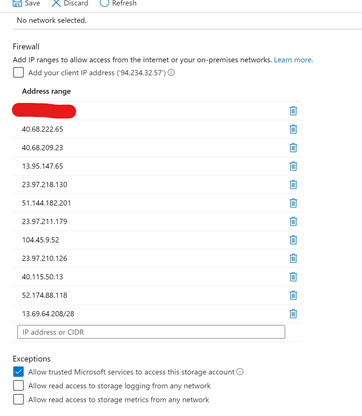

Allowing access to a resource

When you want to open a firewall for Logic Apps deployed in a particular region, you look up the IP addresses in this list and configure the firewall/network security group. This means that the resource is then potentially available to all Logic Apps in that region. Therefore, you need to protect the resource with an additional layer, such as a SAS-key.

This is how we allowed access between our Logic Apps and the Azure SQL server instance. In that case we also used credentials as an additional layer.

To allow access you simply need to find your region in the list and then allow exceptions for the IP-addresses listed.

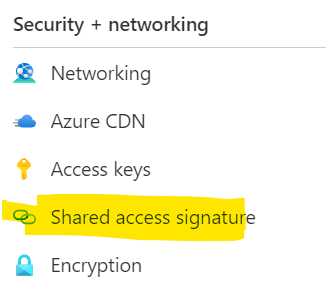

Allowing access to a storage

Now here is when things started to “head south”.

Thanks to a support case I raised, the documentation has been updated and it now reads (my formatting for emphasis):

Logic Apps can’t directly access storage accounts that use firewall rules and exist in the same region. However, if you permit the outbound IP addresses for managed connectors in your region, your logic apps can access storage accounts that are in a different region except when you use the Azure Table Storage or Azure Queue Storage connectors. To access your Table Storage or Queue Storage, you can use the HTTP trigger and actions instead.

What you need to do

If you are using blob or file storage, you do not need the last step, but if you are using Table Storage or Queue Storage, you need to do all these steps.

The Storage and Logic App cannot be in the same region

Move the Logic App accessing the storage to the paired region. In our case, the storage is in North Europe and the Logic Apps are in West Europe.

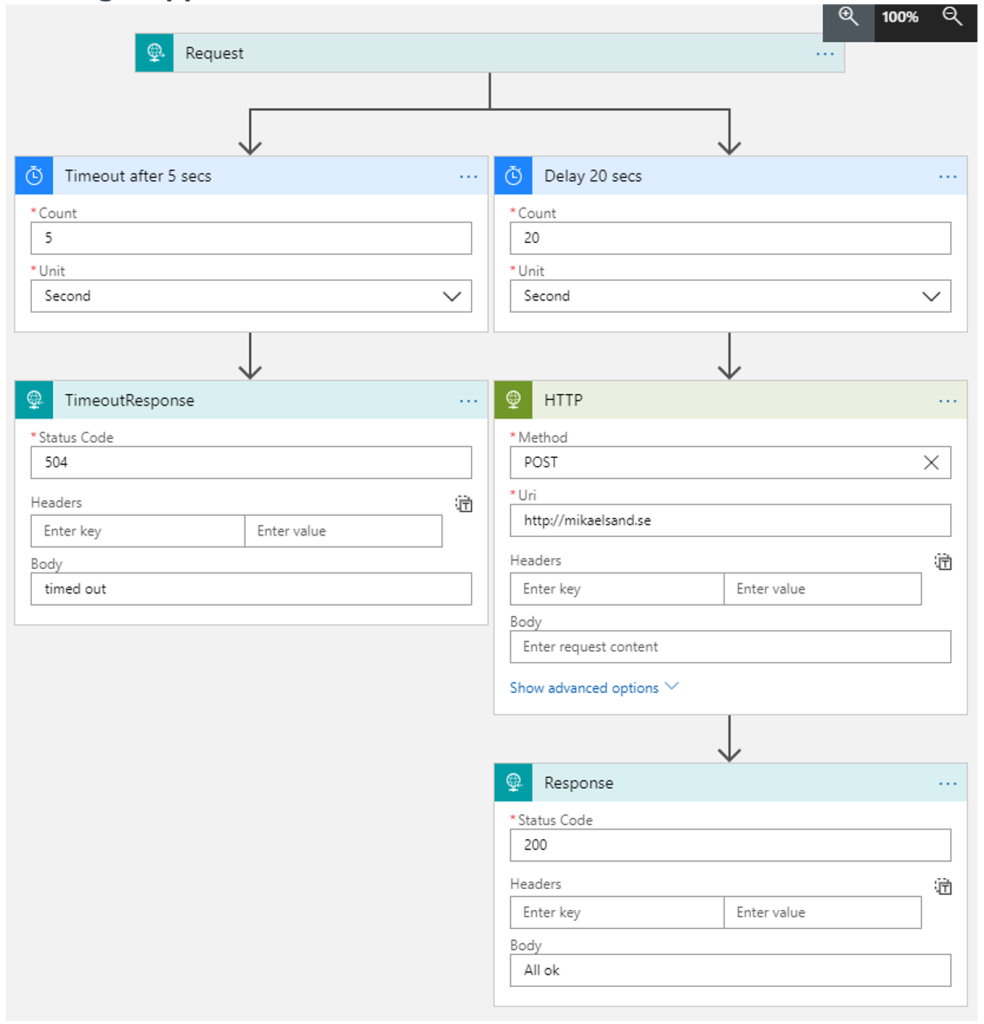

Update the storage firewall to allow IPs from Logic Apps

Finding all the IPs for your Logic App is easy. Just go to this link: https://docs.microsoft.com/en-us/azure/logic-apps/logic-apps-limits-and-config#firewall-configuration-ip-addresses and scroll down to find the Outbound addresses for your region. You need to add all the Logic Apps outbound IPs as well as the managed connector IPs.

Here is a tip: Since you need to add IP-ranges using the CIDR format in the storage firewall, and some IPs are just listed as ranges, you can visit this page to convert them.
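If you would rather script the conversion, here is a minimal sketch using Python's standard `ipaddress` module. The function names and the example addresses (taken from the documentation-only 203.0.113.0/24 block) are my own placeholders, not values from the Logic Apps list. The second helper rests on an assumption you should verify against the current storage documentation: that the storage firewall does not accept /31 or /32 ranges and wants those as individual addresses.

```python
import ipaddress
from typing import List


def range_to_cidrs(first: str, last: str) -> List[str]:
    """Convert an inclusive IPv4 range into the smallest list of CIDR blocks."""
    start = ipaddress.IPv4Address(first)
    end = ipaddress.IPv4Address(last)
    return [str(net) for net in ipaddress.summarize_address_range(start, end)]


def firewall_entries(cidrs: List[str]) -> List[str]:
    """Expand /31 and /32 blocks into single addresses.

    Assumption: the storage firewall rejects /31 and /32 ranges,
    so those are emitted as individual IP addresses instead.
    """
    entries: List[str] = []
    for cidr in cidrs:
        net = ipaddress.ip_network(cidr)
        if net.prefixlen >= 31:
            # Iterating a network yields every address it contains.
            entries.extend(str(ip) for ip in net)
        else:
            entries.append(str(net))
    return entries


if __name__ == "__main__":
    # Placeholder range, not a real Logic Apps address range.
    print(range_to_cidrs("203.0.113.0", "203.0.113.15"))  # ['203.0.113.0/28']
    print(firewall_entries(range_to_cidrs("203.0.113.5", "203.0.113.10")))
```

A range that does not align to a power-of-two boundary comes out as several blocks, which is why the second call ends up as a handful of single addresses.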

Here is another tip: You can find the IP-addresses of the affected Logic App under Properties for the Logic App.

Here is my updated storage firewall after adding everything:

If you are using blob or file storage, you are done.

Update your Logic Apps for Table Storage

We did not use queue storage, so I have no input on that. However, my guess is that it is basically the same.

The connector for Table Storage will still not work, so you need to call the API directly. As a matter of fact, I really liked that way much better as it gives a granularity that the connector does not support. The ins and outs of this will be covered in a separate post.

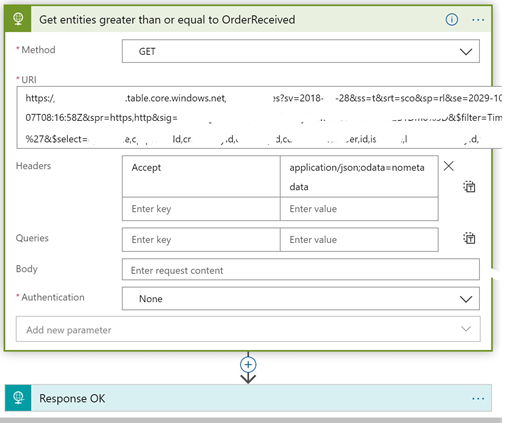

We changed the connector from a Table Storage Connector to an HTTP Connector and configured it like this (sorry for the strange formatting):

The documentation on how to use the API directly can be found here: https://docs.microsoft.com/en-us/rest/api/storageservices/query-entities
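To give an idea of what such a direct call looks like, here is a rough sketch in Python that only builds the method, URI and headers for a Query Entities request. It is not the exact configuration we used. The account name, table name, filter and SAS token are hypothetical placeholders, authenticating with a SAS token is my assumption for the example, and the `x-ms-version` value is just one published service version.

```python
from datetime import datetime, timezone
from email.utils import format_datetime
from typing import Dict, List, Optional
from urllib.parse import quote


def build_query_entities_request(
    account: str,
    table: str,
    sas_token: str,
    filter_expr: Optional[str] = None,
    select: Optional[List[str]] = None,
) -> Dict[str, object]:
    """Build the pieces of a Table Storage Query Entities call (nothing is sent)."""
    base = f"https://{account}.table.core.windows.net/{table}()"
    parts = []
    if filter_expr:
        # OData filter, URL-encoded (spaces and quotes included).
        parts.append("$filter=" + quote(filter_expr, safe=""))
    if select:
        # Only return the listed properties.
        parts.append("$select=" + ",".join(select))
    sas = sas_token.lstrip("?")
    if sas:
        # Assumption: the call is authorized with a SAS token on the query string.
        parts.append(sas)
    uri = base + ("?" + "&".join(parts) if parts else "")
    headers = {
        # Ask for plain JSON without OData metadata.
        "Accept": "application/json;odata=nometadata",
        "x-ms-version": "2019-02-02",
        "x-ms-date": format_datetime(datetime.now(timezone.utc), usegmt=True),
    }
    return {"method": "GET", "uri": uri, "headers": headers}


if __name__ == "__main__":
    request = build_query_entities_request(
        account="myaccount",        # hypothetical storage account
        table="orders",             # hypothetical table
        sas_token="sv=PLACEHOLDER", # hypothetical SAS token
        filter_expr="PartitionKey eq 'orders'",
        select=["RowKey", "Status"],
    )
    print(request["method"], request["uri"])
```

The three values map directly onto the Method, URI and Headers fields of the HTTP action. The `$filter` and `$select` options are where the extra granularity comes from.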

To summarize

Enabling the firewall on an Azure Storage account might be necessary. Logic Apps, as well as other Azure services, have issues with this. To solve it for Azure Table Storage you need to:

- Place the Logic App in another region (datacenter).

- Use the API directly with the HTTP connector.

Storage is a bit strange in some respects, and not only for Logic Apps, so strange things can happen.