A while ago I posted an easy how-to guide for calling APIs in Dynamics 365 Finance and Operations (or D365 F&O to its friends). In that post I looked up the statuses of batch jobs. This is the follow-up post on how to get notified when a batch job is done.

Batch job and alerting

You can configure a batch to send you an e-mail when it is done or has errored, but an e-mail is not very machine-friendly. I needed a response that another system could act on, so the next action (or batch) could be executed.

If you only need an e-mail when a batch is done, I suggest you check out this page.

I am actually surprised that this is not a feature in D365, but it is easy enough to build using Logic Apps.

The D365 APIs

If you need information on connecting to the APIs, you can find that in my last post, Talking to the D365 F&O Rest APIs.

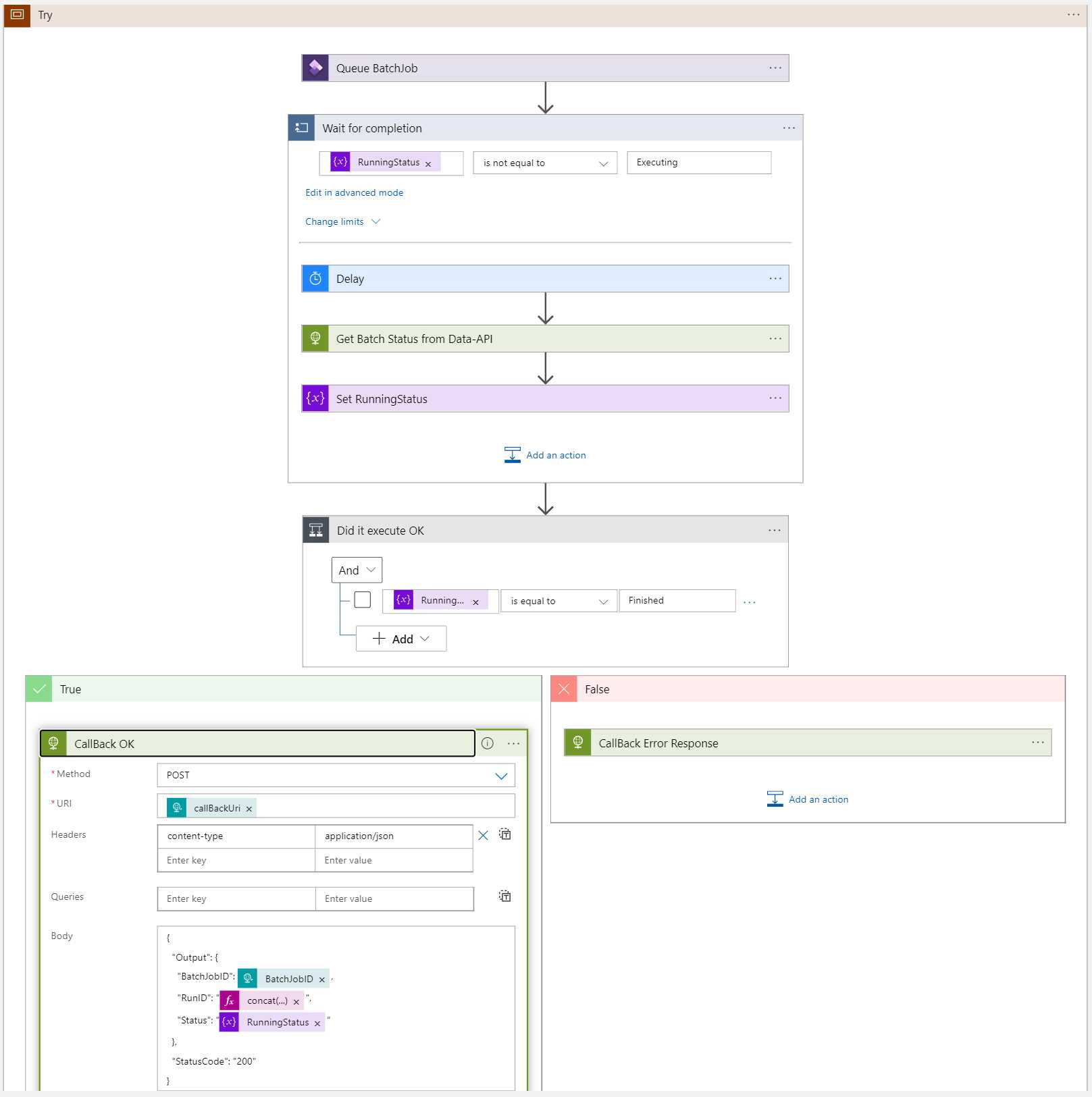

The secret here is to use two features: a callback URL and a do-while loop (the Until action in Logic Apps).

What is a callback URL?

Glad you asked. When you call an API the operation might take a long time to complete, such as creating a new order or running a batch. Instead of either waiting for a very long time or getting a timeout, you give the call a callback URL. This URL is basically saying “When you are done, reply to this address”.

When calling such an endpoint you supply the callback URL as a header or part of a message body, and the service should reply with a 202 Accepted, meaning “I received your message and will get back to you.”

Setting up a callback URL is outside the scope of this post. In my scenario it was handled by Azure Data Factory and was just a tickbox.

The Logic App flow

Your exact needs may differ, but we decided on getting a batch ID and the callBackURL as properties of the POST body.

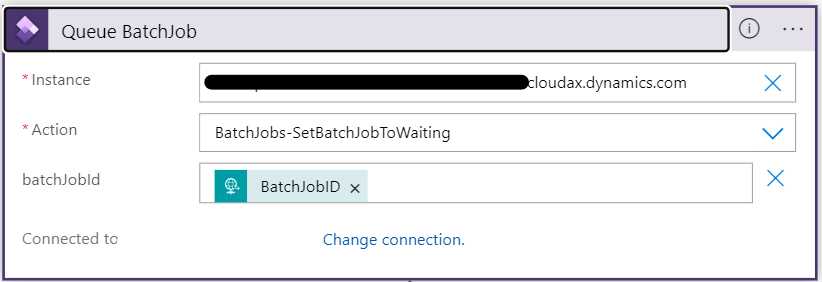
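To make the shape of that POST body concrete, here is a minimal Python sketch of how a receiver could validate it. The property name `batchId`, the class `TriggerRequest` and the function `parse_trigger_body` are my own placeholders for illustration; only `callBackURL` comes from the flow described above, and your Logic App schema may well look different.

```python
import json
from dataclasses import dataclass
from urllib.parse import urlparse


@dataclass
class TriggerRequest:
    batch_id: str
    callback_url: str


def parse_trigger_body(raw: str) -> TriggerRequest:
    """Check that the POST body carries both properties the flow needs."""
    body = json.loads(raw)
    if not isinstance(body, dict):
        raise ValueError("body must be a JSON object")
    # "batchId" is an assumed property name; "callBackURL" is the one used in this post.
    batch_id = body.get("batchId")
    callback_url = body.get("callBackURL")
    if not batch_id or not callback_url:
        raise ValueError("body must contain batchId and callBackURL")
    if urlparse(callback_url).scheme not in ("http", "https"):
        raise ValueError("callBackURL must be an http(s) URL")
    return TriggerRequest(str(batch_id), callback_url)
```

Rejecting a body without a usable callback URL up front is a deliberate choice: without it the flow would run the batch and then have nowhere to report the result.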

The flow starts the batch using the standard Logic Apps connector.

Then the Logic App simply queries the “Batch Status” in the D365 Data API until the batch is not executing anymore.

When the batch is done, or there is an error of some sort, we respond back using the callback URL.

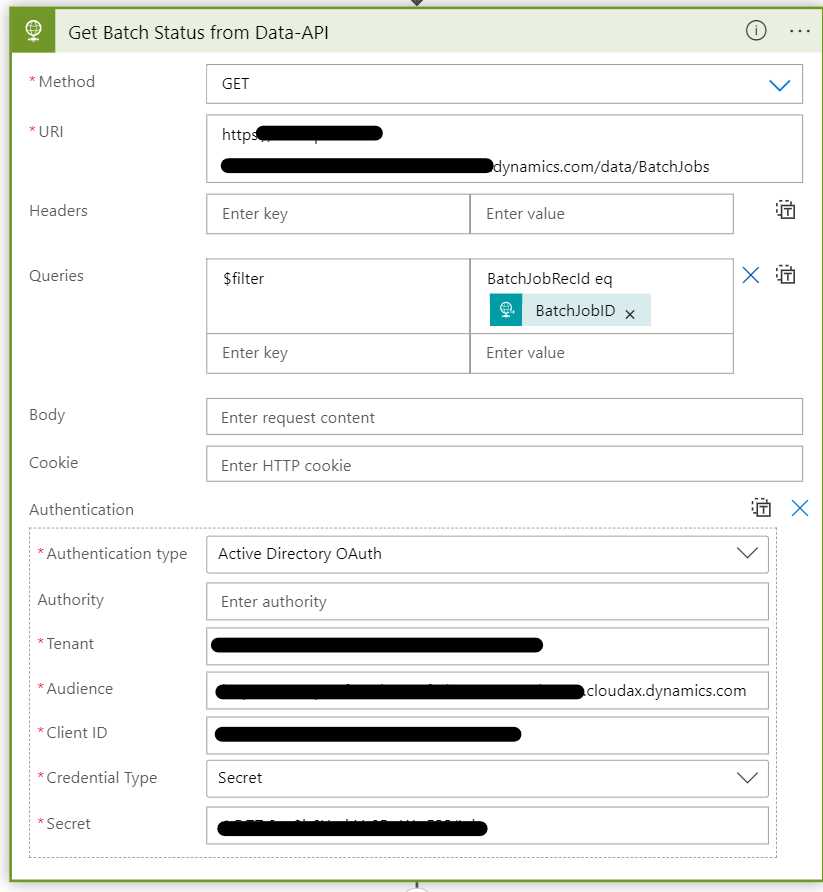
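If you prefer to read the loop as code, the same logic can be sketched in Python roughly like this. It is not what the Logic App runs, just an illustration of the pattern. The status names `Executing` and `Finished`, the `outcome` field and the function `wait_for_batch` are assumptions on my part; `get_status` and `notify` stand in for the Data API call and the POST to the callback URL.

```python
import time
from typing import Callable, Dict


def wait_for_batch(
    get_status: Callable[[], str],
    notify: Callable[[Dict[str, str]], None],
    interval_seconds: float = 30.0,
    max_polls: int = 120,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Poll until the batch is no longer executing, then report back once."""
    status = get_status()
    polls = 1
    # "Executing" is an assumed status name; check what your environment returns.
    while status == "Executing" and polls < max_polls:
        sleep(interval_seconds)
        status = get_status()
        polls += 1
    # Anything other than "Finished" (also an assumed name) is reported as a failure,
    # including a batch that is still executing when max_polls is reached.
    outcome = "Succeeded" if status == "Finished" else "Failed"
    notify({"status": status, "outcome": outcome})
    return status
```

The `max_polls` cap plays the same role as the limits on a loop in Logic Apps: without it, a batch that never leaves the executing state would keep the flow running forever.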

Here is what the Get Batch Status from Data-API looks like:

Details about the call can be found in the earlier post.

A warning!

This will not work on so-called “scheduled batch jobs”. The reason is that if you trigger them, i.e. set them to waiting, they will just continue to wait until the scheduling kicks in. In the Logic App above this will respond back as an error.

If you need to be able to trigger the jobs immediately, you need to remove the scheduling. But that is kind of the reason you are doing this anyway.

Tips for testing

If you trigger the Logic App using Postman you cannot really handle the callback, so you need to set up another endpoint for your Logic App to respond to. I used RequestBin, which solves this problem 100%.
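If you would rather see what such a catch-all endpoint does, here is a small Python sketch of one using only the standard library. It is not what I used, and the names `CallbackHandler`, `start_receiver`, `post_json` and `received` are made up for this example. Note that a Logic App running in Azure cannot reach `127.0.0.1`, so this is only useful for local experiments or behind some kind of public tunnel.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

received = []  # bodies of every callback seen so far


class CallbackHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        # Read the body, remember it, and answer with an empty 200.
        length = int(self.headers.get("Content-Length", 0))
        received.append(self.rfile.read(length).decode("utf-8"))
        self.send_response(200)
        self.send_header("Content-Length", "0")
        self.end_headers()

    def log_message(self, format, *args):
        pass  # keep the console quiet


def start_receiver(port: int = 0) -> HTTPServer:
    """Start the receiver in a background thread; port 0 picks a free port."""
    server = HTTPServer(("127.0.0.1", port), CallbackHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def post_json(url: str, payload: dict) -> int:
    """Send a JSON POST the way a callback would, and return the HTTP status."""
    data = json.dumps(payload).encode("utf-8")
    request = urllib.request.Request(
        url, data=data, headers={"Content-Type": "application/json"}, method="POST"
    )
    with urllib.request.urlopen(request) as response:
        return response.status
```

Start it with `server = start_receiver()`, read the chosen port from `server.server_address[1]`, and call `server.shutdown()` when you are done.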

Conclusion

Setting this up is really easy and I am sure you can do it using Power Automate. I am just a Logic Apps guy and like solving things using Logic Apps. Adding this to your Azure Data Factory or similar lets you call a pipeline “when the first one is done” instead of using scheduling.