This thing drove me crazy. Batsh*t insane, but it is all better now because I found the solution.

First off, thanks to Mark Brimble for volunteering his help on how he solved this issue, and for a very useful blog post on XML to JSON in Liquid templates.

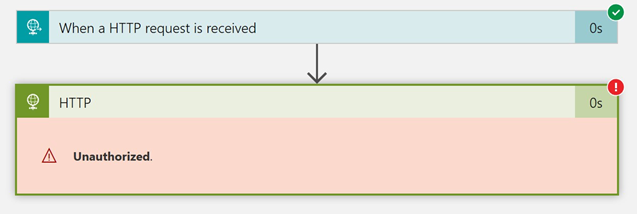

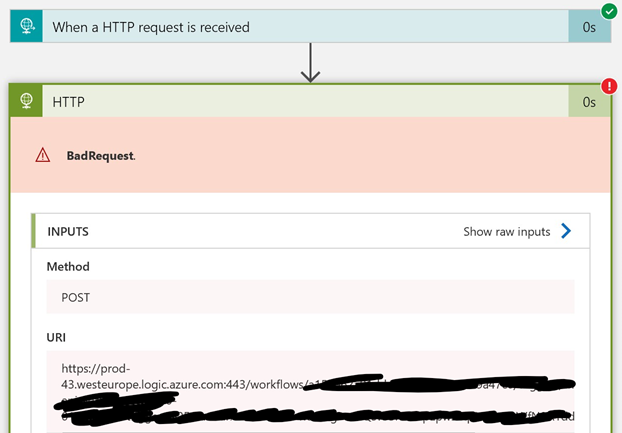

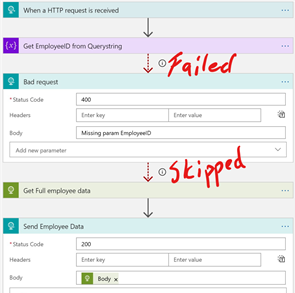

The issue

You need to call a SOAP service that returns XML and you want to use Liquid templates instead of XSLT, because it is new and fresh and fun. You use the SOAP passthru as it is the most flexible way of calling a SOAP service.

The response body might look a little like this:

```xml
<Soap:Envelope xmlns:Soap="http://schemas.xmlsoap.org/soap/envelope/">
  <Soap:Body>
    <ns0:PriceList_Response xmlns:ns0="myNamespace">
      <PriceList>
        <companyId>1</companyId>
        <prices>
          <price>
            <productId>QWERTY123</productId>
            <unitPrice>1900.00</unitPrice>
            <currency>EUR</currency>
          </price>
        </prices>
      </PriceList>
    </ns0:PriceList_Response>
  </Soap:Body>
</Soap:Envelope>
```

You transform the payload into JSON and want to put it through a Liquid template.

```json
{
  "Soap:Envelope": {
    "@xmlns:Soap": "http://schemas.xmlsoap.org/soap/envelope/",
    "Soap:Body": {
      "ns0:PriceList_Response": {
        "@xmlns": "myNamespace",
        "PriceList": {
          "companyId": {
            "#text": "1"
          },
          "prices": {
            "price": {
              "productId": "QWERTY123",
              "unitPrice": "1900.00",
              "currency": "EUR"
            }
          }
        }
      }
    }
  }
}
```

How do you access the “unitPrice” property in this payload? This will not work:

```liquid
{{ content.Soap:Envelope.Soap:Body.ns0:PriceList_Response.PriceList.prices.price.unitPrice }}
```

The colon is a special character in Liquid templates, and I found no way of “escaping” it.

The solution

I solved this by wrapping the name of the property I wanted to access, as a quoted string, in the [ and ] characters. That way you can access a property or object using whatever string you like, colons included. This means you can access the unitPrice above like this:

```liquid
{{ content.['Soap:Envelope'].['Soap:Body'].['ns0:PriceList_Response'].PriceList.prices.price.unitPrice }}
```

I was so happy not to be insane anymore.
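To get a feel for why the brackets help, here is a rough Python model of a Liquid-style path lookup. It is a simplified sketch, not the API Management or DotLiquid source: it assumes a path is split into tokens that are either a bracketed, quoted key or a run of word characters, with dots acting only as separators. Under that assumption `Soap:Envelope` falls apart into `Soap` and `Envelope`, neither of which is a key, while `['Soap:Envelope']` survives as a single key. The `lookup` function and the trimmed payload are hypothetical.

```python
import re

# Assumed tokenizer: a bracketed key like ['Soap:Envelope'] OR a run of word chars/hyphens.
# Anything else (dots, colons) is skipped, so a bare colon splits a name in two.
TOKEN = re.compile(r"\[[^\]]+\]|[\w\-]+")


def lookup(data, path):
    """Resolve a Liquid-style path against nested dicts; None if any step is missing."""
    current = data
    for token in TOKEN.findall(path):
        if token.startswith("["):
            # ['Soap:Envelope'] -> Soap:Envelope (strip brackets, then quotes)
            key = token[1:-1].strip().strip("'\"")
        else:
            key = token
        if not isinstance(current, dict) or key not in current:
            return None
        current = current[key]
    return current


# Trimmed copy of the JSON payload (namespace attributes omitted).
payload = {
    "Soap:Envelope": {
        "Soap:Body": {
            "ns0:PriceList_Response": {
                "PriceList": {
                    "prices": {
                        "price": {
                            "productId": "QWERTY123",
                            "unitPrice": "1900.00",
                            "currency": "EUR",
                        }
                    }
                }
            }
        }
    }
}
context = {"content": payload}

dotted = "content.Soap:Envelope.Soap:Body.ns0:PriceList_Response.PriceList.prices.price.unitPrice"
bracketed = ("content.['Soap:Envelope'].['Soap:Body'].['ns0:PriceList_Response']"
             ".PriceList.prices.price.unitPrice")

print(lookup(context, dotted))     # prints None: "Soap" is not a key
print(lookup(context, bracketed))  # prints 1900.00
```

The point of the model is only that a quoted key is taken whole, whatever characters it contains.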

A tip

Accessing properties deep in a document gets tedious if you need a string like that for every property value in your Liquid template. Instead, assign the object to a variable once and read its properties from there. Using the payload above, a Liquid template that maps the prices could look like this:

```liquid
{% assign price = content.['Soap:Envelope'].['Soap:Body'].['ns0:PriceList_Response'].PriceList.prices.price %}
"prices": [
  {
    "ProdId": "{{price.productId}}",
    "ProdPrice": "{{price.unitPrice}}",
    "Curr": "{{price.currency}}"
  }
]
```
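The same idea in plain Python, as a hypothetical sketch of what that template does (`build_prices` is illustrative, not part of API Management): walk the long path once, keep the price object, then read each field from the short variable. The variable has to hold the whole `price` object; binding it to `unitPrice` alone would leave nothing to read `productId` and `currency` from.

```python
import json

# Trimmed copy of the JSON payload (namespace attributes omitted).
content = {
    "Soap:Envelope": {
        "Soap:Body": {
            "ns0:PriceList_Response": {
                "PriceList": {
                    "prices": {
                        "price": {
                            "productId": "QWERTY123",
                            "unitPrice": "1900.00",
                            "currency": "EUR",
                        }
                    }
                }
            }
        }
    }
}


def build_prices(content):
    """Hypothetical Python mirror of the Liquid template: resolve once, reuse."""
    # Equivalent of {% assign price = ... %}: walk the long path a single time
    # and keep the whole price object, not just its unitPrice.
    price = content["Soap:Envelope"]["Soap:Body"]["ns0:PriceList_Response"][
        "PriceList"]["prices"]["price"]
    # Every output field now reads from the short variable.
    return {
        "prices": [
            {
                "ProdId": price["productId"],
                "ProdPrice": price["unitPrice"],
                "Curr": price["currency"],
            }
        ]
    }


print(json.dumps(build_prices(content), indent=2))
```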
